I am Mariia, a Data Engineer in the Data Product team at FELD M. In April 2023, my colleague and I visited Berlin to attend the famous PyCon, one of the largest European conventions for the discussion and promotion of the Python programming language. Every year it gathers Python users and enthusiasts from all over the world and gives them a platform to share information about new developments, exchange knowledge, and learn best practices from each other.

In 2023, PyCon Berlin was merged with PyData, a forum of users and developers of data analysis tools. It lasted for three days and included so many presentations that it would take a team of at least seven people to attend all of them. Fortunately, the sessions were recorded, and now, after some months, they are available for everyone. You will find a link to the YouTube playlist of PyCon Berlin 2023 talks at the end of this article.

But first, I would like to offer you my own overview of the presentations that we attended and liked the most. Please remember that this overview is based on personal opinion, so it may be biased and different from yours. Feel free to add your perspective in the comments!

1. Pandas 2.0 and beyond

For whom: Software and Data Engineers, Data Scientists, and everyone who works with Pandas (except animal keepers in public zoos, maybe)

Why it’s worth watching: The talk not only covers the changes that were implemented in Pandas 2.0 in comparison with Pandas 1.0, but also touches on PyArrow, which is actively used in the latest version of Pandas. (If you are curious what PyArrow is, there is a link to a talk about it at the end of this list.)

Our verdict: Interesting topic, very relevant for our work. Rating: 9/10

Details: https://pretalx.com/pyconde-pydata-berlin-2023/talk/DB3KC7/

Video: https://www.youtube.com/watch?v=7QQZ1hrHG1s&list=PLGVZCDnMOq0peDguAzds7kVmBr8avp46K

2. Large Scale Feature Engineering and Data Science with Python & Snowflake

For whom: Data Scientists, Data Engineers, and those who are interested in Snowflake

Why it’s worth watching: This talk was essentially an introduction to Snowpark, Snowflake’s framework for machine learning development that can work with big data in Python, Scala, or Java.

Our verdict: Good presentation, but you won’t get much out of it unless you work with Snowflake on a regular basis. Rating: 7/10

Details: https://pretalx.com/pyconde-pydata-berlin-2023/talk/3TYND7/

Video: https://www.youtube.com/watch?v=mpY7auHK3zw&list=PLGVZCDnMOq0peDguAzds7kVmBr8avp46K

3. Raised by Pandas, striving for more: An opinionated introduction to Polars

For whom: Software and Data Engineers, Data Scientists, and everyone who works with Pandas (but is striving for more)

Why it’s worth watching: The talk gives a really good overview of Polars and inspires you to test it as a more powerful alternative to Pandas.

Our verdict: The speaker was passionate about the framework and very engaging, and the slides were great fun! Above all, Polars is quite a hot topic at the moment, so it definitely earns top marks. Rating: 10/10

Details: https://pretalx.com/pyconde-pydata-berlin-2023/talk/Z8PESY/

Video: https://www.youtube.com/watch?v=7xcUvzERwx0&list=PLGVZCDnMOq0peDguAzds7kVmBr8avp46K

4. Common issues with Time Series data and how to solve them

For whom: Mostly Data Scientists, but still relevant for anyone working with data

Why it’s worth watching: This talk walks you through four common issues with Time Series data and gives you hints on how to resolve them.

Our verdict: The presentation was quite good, but it covered relatively basic things. Rating: 7/10

Details: https://pretalx.com/pyconde-pydata-berlin-2023/talk/ZRAFKA/

Video: https://www.youtube.com/watch?v=sSF1uzK6DuI&list=PLGVZCDnMOq0peDguAzds7kVmBr8avp46K

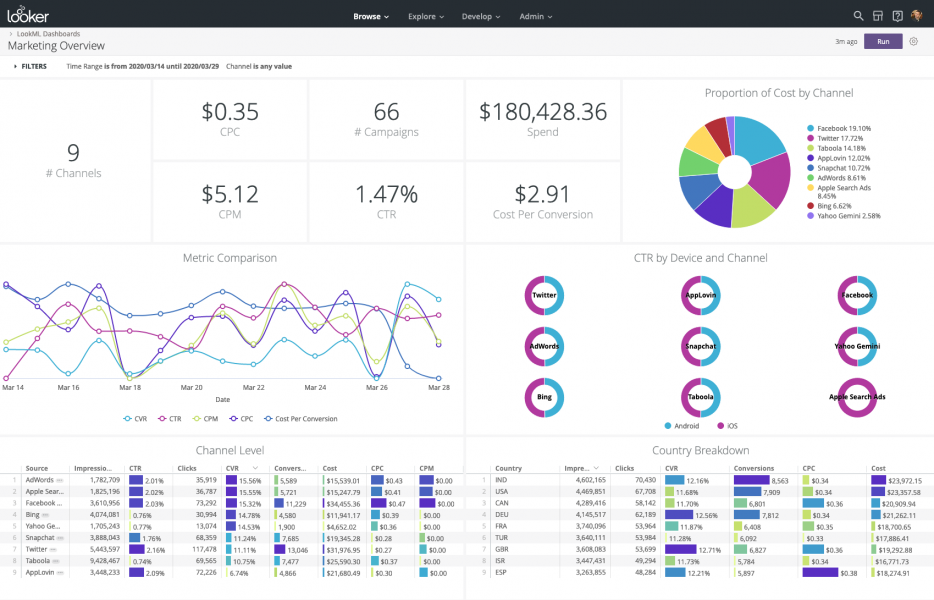

5. WALD: A Modern & Sustainable Analytics Stack

For whom: Data Engineers, BI specialists, and companies and teams who aim to become more data-driven

Why it’s worth watching: The presentation covers the tools you can use to build a modern reporting pipeline and introduces WALD, a solution in which these tools are already combined.

Our verdict: We were really curious to check out which technologies our colleagues from other companies use for building reporting pipelines. Also, I have to admit, the slides were very cool! Rating: 8/10

Details: https://pretalx.com/pyconde-pydata-berlin-2023/talk/TP7ABB/

Video: https://www.youtube.com/watch?v=7GfbA6_a09I&list=PLGVZCDnMOq0peDguAzds7kVmBr8avp46K

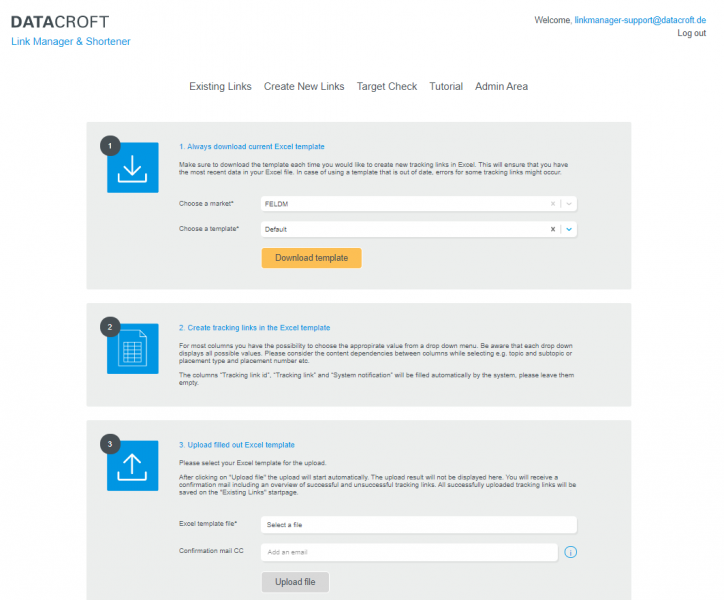

If you are looking for a ready-to-use solution that helps you extract more value from your data, check out the Datacroft Analytics Stack, developed by our Data Product team, and contact us for more details!

6. Towards Learned Database Systems

For whom: Anyone working with databases

Why it’s worth watching: The talk presents a new direction, so-called learned database management systems (DBMS), in which core parts of the DBMS are replaced by machine learning models, an approach that has shown significant performance benefits.

Our verdict: The topic is exciting per se, but kudos to the speaker – he made it even better with his excellent and well-balanced presentation! Rating: 10/10

Details: https://pretalx.com/pyconde-pydata-berlin-2023/talk/JZSYA3/

Video: https://www.youtube.com/watch?v=VtL6Y4x10O0&list=PLGVZCDnMOq0peDguAzds7kVmBr8avp46K

7. Rusty Python: A Case Study

For whom: Software and Data Engineers working with Python

Why it’s worth watching: The talk gives an overview of Rust and its benefits for Python developers. It is an exciting case study of implementing a solution in Rust and integrating it with a Python application using PyO3.

Our verdict: Very interesting topic and excellent presentation. Rating: 10/10

Details: https://pretalx.com/pyconde-pydata-berlin-2023/talk/LMGF8V/

Video: https://www.youtube.com/watch?v=Y5XQR0wUEyM&list=PLGVZCDnMOq0peDguAzds7kVmBr8avp46K

8. Lorem ipsum dolor sit amet

For whom: Everyone working with software and data

Why it’s worth watching: The talk is dedicated to the process of finding meaningful test data for your software. The importance of this topic can’t be overestimated, so those who work with data on a regular basis should definitely check it out.

Our verdict: Fun slides, but I had the feeling that the main message was a bit diluted by the number of jokes and examples. Still, it was a useful and engaging session. Rating: 8/10

Details: https://pretalx.com/pyconde-pydata-berlin-2023/talk/HJ9J7Z/

Video: https://www.youtube.com/watch?v=ulBqrMyVSMM&list=PLGVZCDnMOq0peDguAzds7kVmBr8avp46K

9. Unlocking Information – Creating Synthetic Data for Open Access

For whom: Data Scientists, but might be interesting to anyone working with data

Why it’s worth watching: If you’ve ever wondered how to make the data you use in your work public without disclosing any personal information, this presentation might be exactly what you are looking for.

Our verdict: The topic is a bit niche, though still good for general professional development. Rating: 7/10

Details: https://pretalx.com/pyconde-pydata-berlin-2023/talk/J9KRKZ/

Video: https://www.youtube.com/watch?v=N1i_Z-WKaRs&list=PLGVZCDnMOq0peDguAzds7kVmBr8avp46K

10. Most of you don’t need Spark. Large-scale data management on a budget with Python

For whom: Software and Data Engineers, Data Scientists

Why it’s worth watching: The talk covered a lot of aspects and technologies that can help you manage large volumes of data and build scalable infrastructure for its processing.

Our verdict: The speaker asks some questions that might make you feel a bit dumb and trigger an episode of impostor syndrome, but besides that the talk was great! Rating: 9/10

Details: https://pretalx.com/pyconde-pydata-berlin-2023/talk/V9HBUU/

Video: https://www.youtube.com/watch?v=OsYcsv4VkO8&list=PLGVZCDnMOq0peDguAzds7kVmBr8avp46K

11. Apache Arrow: connecting and accelerating dataframe libraries across the PyData ecosystem

For whom: Software and Data Engineers, Data Scientists

Why it’s worth watching: If you have heard about PyArrow or Apache Arrow before (e.g., while watching the “Pandas 2.0 and beyond” talk) and you want to dive deeper and find out more about this technology, this presentation is for you. If you haven’t heard of PyArrow before, this presentation is an even better fit for you.

Our verdict: Arrow is fantastic, but the talk was not too light-hearted, so it requires some concentration. Rating: 8/10

Details: https://pretalx.com/pyconde-pydata-berlin-2023/talk/H7ZCWK/

Video: https://www.youtube.com/watch?v=h7F3Rr8Ozgw&list=PLGVZCDnMOq0peDguAzds7kVmBr8avp46K

12. Postmodern Architecture – The Python Powered Modern Data Stack

For whom: Data Engineers, BI specialists, companies and teams who aim to become more data-driven

Why it’s worth watching: The speaker and his team basically built a competitor to WALD (see #5 in this list). They offer it as a set of technologies that form a flexible stack for integrating data and extracting value from it.

Our verdict: Again, if you are curious about technologies that can be used for building a modern reporting pipeline, you should watch it. And as a fan of Brooklyn Nine-Nine, I can’t help but admire the slides. Rating: 8/10

Details: https://pretalx.com/pyconde-pydata-berlin-2023/talk/A7B8P8/

Video: https://www.youtube.com/watch?v=na7yqvz5-B4&list=PLGVZCDnMOq0peDguAzds7kVmBr8avp46K

As mentioned above, there were many more exciting presentations at PyCon Berlin 2023. You can find the full list of sessions with descriptions on the conference schedule page. And, fortunately, the majority of the recordings are now available to everyone on YouTube!

To wrap up, PyCon is a great event for everyone who is passionate about programming, data, and, of course, Python. It inspires you to try new things and rethink your approaches, brings you closer to your fellow developers, and gives you the joy of learning from the best experts in your field. And of course, it’s a perfect reason to visit the vibrant city of Berlin and enjoy its amazing local food, nightlife, rich history, and some of its most remarkable sights! We are looking forward to PyCon 2024 and hope that, after reading this article, you are too!

If you are interested in our work within the Data Product Team, you can find more information here. We also showcase some of our data engineering & architecture projects here.