This year, FELD M participated for the third time in the “SHIFT – Conference on Digital Ethics”, which took place on 20 April at the X-TRA in Zurich, Switzerland. After two digital editions, the approximately 220 participants were able to meet and discuss in person again this year. The conference featured numerous high-profile speakers who shared their expertise and practice-oriented perspectives on the most pressing ethical issues of digitalisation; in several breakout sessions, individual topics were explored interactively and in more depth.

The initiator and host of SHIFT Cornelia Diethelm (left), the closing panel and the moderator Patrizia Laeri (centre). (c) Photo: Louis Rafael Rosenthal.

The topics of this year’s SHIFT conference

The discussions focused on the challenges and opportunities presented by the rapid technological advances of recent years and months – above all, of course, artificial intelligence and machine learning, robotics, manipulative design patterns on websites and apps, but also the representation of artificial intelligence in the media or the increasingly important topic of face recognition. Sustainability as a competitive advantage was also discussed, as was the controversial AI Act. Of course, data protection was not neglected either, as it forms the interface between corporate compliance and corporate ethics in many companies.

The Programme.

The take-aways for FELD M

At FELD M, we have been dealing with digital ethics topics for several years – from AI ethics and data for good to dark patterns in consent management and technical solutions for clean data sharing. It was therefore all the more exciting to find some of our views confirmed and others challenged by surprising insights.

Digital Literacies

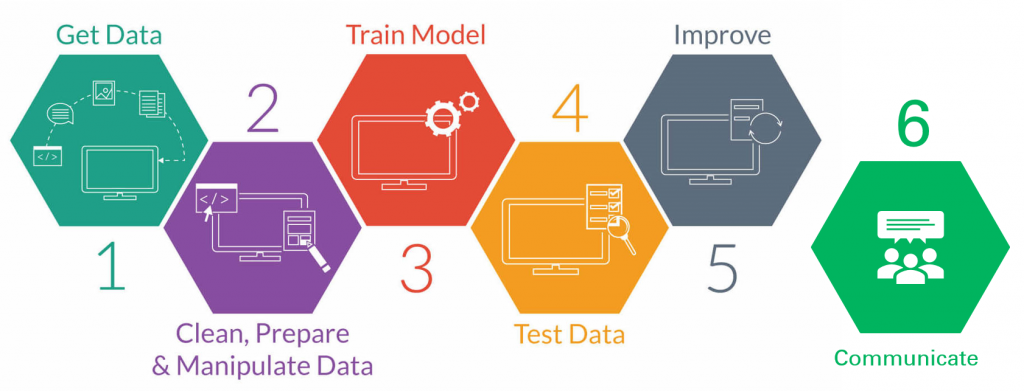

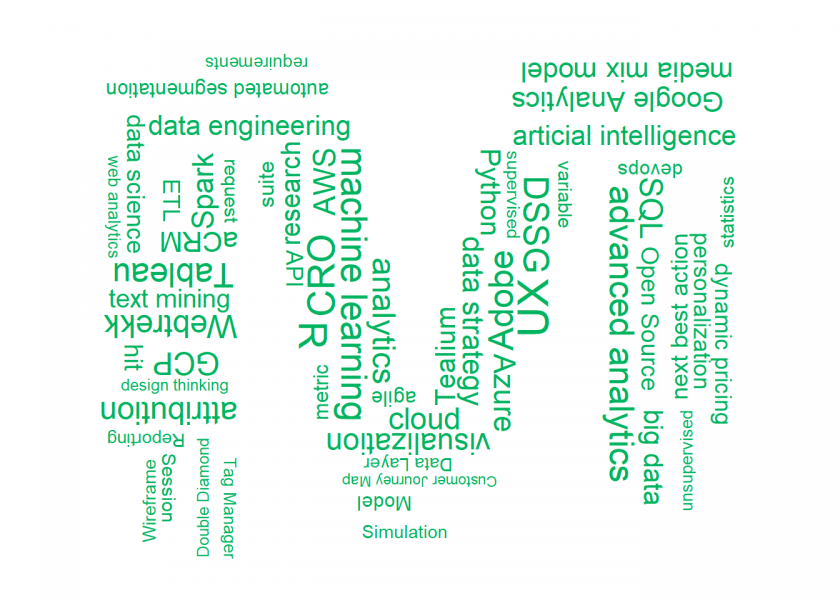

AI literacy, data literacy, tool literacy and privacy literacy are only a few of many literacies, i.e. competences that should be part of the basic skills of every person working in digital (or non-digital!) professions in the 21st century. After all, we all come into contact with new technologies in one way or another. We need to be able to assess what consequences the use of “artificial intelligence” has for our (working) lives. We have to be able to read diagrams and tables, as the COVID-19 pandemic showed. We need to know how data is collected and used so that we can either use it as a basis for data-driven decisions at work or protect ourselves from data misuse in our private lives. We need to be able to deal with different applications: understand how to use them, recognise where they try to trick us, know how to get them to do what we want, and know how to stop them from doing what we do not want. This also includes a conscientious impact assessment when using digital technologies.

These literacies were mentioned again and again in the keynote speeches, and even away from the official conference programme, there was lively discussion about how these literacies can be strategically and consistently promoted among the population, but especially in companies. With its services, FELD M helps many clients to work in a more data-driven way, but for this to happen, companies need the aforementioned literacies that enable a data-driven organisation in the first place – even before deciding on the appropriate tool stack or the best tracking setup.

Dark Patterns and Deceptive Designs

At least since the appearance of consent banners on all websites, everyone has had to deal with so-called dark patterns – manipulative techniques that make it unattractive NOT to give consent to data processing. They work through misleading wording, through many banner levels that you have to click through to object to the processing, or through visual highlighting of options that are good for the companies but rather bad for privacy-savvy users. (You can find a dedicated article and a whitepaper to download on our blog, introducing some of the most common dark patterns in consent management.) However, these manipulative design patterns are not only found on consent banners, but also on travel portals, which regularly claim that there is only one room left in the selected category and that you should therefore book very quickly.

A study presented at the conference brought surprising insights: most people do not perceive the manipulation as bad at all. Some even spoke approvingly of it, for example when paid transport insurance was slipped into their shopping basket while shopping online. What the findings of this study mean will have to be discussed. What is clear is that digital literacy needs to be addressed here as well, so that people are informed enough to recognise when they are being manipulated.

Data Rooms and Data Sharing

Trusted Data Centres, Trusted Data Services, Data Clean Rooms, Data Rooms and Data Sharing – if you think you have lost track of these terms, you are not alone. The terms have been appearing with increasing frequency for some time, and a clear definition is difficult, partly because fundamentally different concepts can lie behind them and partly because some rely on highly complex technologies such as differential privacy. Established use cases and practical examples are also hard to come by at the moment.

At FELD M, we have developed a common understanding of these technologies, not least because our clients can use them to compensate for a poorer data basis caused by ad blockers or low consent rates in consent management. A detailed breakdown is beyond the scope of this article, but we took away from the conference that there are numerous public efforts in this area that could provide standards and the necessary security for data sharing in the future. It is important to continue to follow these developments.

Our colleague André Hellemeier in one of the breakout sessions. (c) Photo: Louis Rafael Rosenthal.

Conclusion

We have selected only a few exemplary topics that are directly related to our work at FELD M and to what we are currently dealing with on a strategic level. The conference put us in contact with other experts who face the same problems and made it possible to compare our own ideas and developments with theirs. This comparison with different perspectives is crucial if a technological-ethical compass is to be recalibrated again and again in the best possible way. In 2024, we will continue to look for new perspectives, innovative ideas and new insights at SHIFT, because “machines” – in the broadest sense – penetrate and determine our lives more and more and therefore need morals.

If you have any questions or would like to discuss data ethics and corporate digital responsibility, our team is happy to hear from you: data-ethics@feld-m.de.

Would you like to stay up to date on these topics and see how we put digital ethics into practice? In our FELD M Newsletter, we provide (ir)regular updates and information on a wide variety of topics from our own and our clients’ day-to-day business. Subscribe here!