A traditional data warehouse is replaced – an EDP-lovestory.

on 27.08.2020 by Bernhard Janetzki

Let’s get this party started – A promising first contact

In mid-March 2019, we received a message from Christian Weber, the COO of Freeletics, via the contact form of our company website. A former colleague from Switzerland had recommended us because they needed support in the redesign and development of a solution in AWS to replace the existing data warehouse.

In love with Data – A slight tingling sensation

These days, I sometimes like to call myself an IT grandpa. I have been building things on the net for more than 25 years. It started with the Linux router at my family home, which served the family with internet over the LAN and helped me understand the basic workings of the internet and its protocols such as TCP, UDP and HTTP. Today, my team and I support our customers in designing and implementing applications for the internet.

Helping the well-known startup Freeletics to replace their DWH and design a new, future-proof architecture immediately triggered a slight tingling sensation in me. The amount of data to be processed had to be immense!

Although we had already implemented projects on-premise and in the Azure cloud, including what are now often referred to as “big-data” projects, we had only gained basic experience with AWS at that time. We therefore did not want to call ourselves experts in this area. But with enough respect for the task, we decided to take a closer look.

It’s a match! – A quick start

After a first meeting with Christian and his data engineering team, it soon became clear that we were going to work together.

The team understood what was important: an in-depth understanding of technologies and their interaction in general, as well as experience in designing and implementing applications.

Understanding the past – Understanding the existing system

To develop a new, future-proof system, we first had to understand the existing one. The team gave us insight into the system that had been supplying Freeletics with data up to that point:

- Talend as ETL tool

- Mainly batch based data processing

- EC2 Linux machines as ETL hosts

- Redshift databases as DWH

- Data sources and their target formats in the DWH were identified.

Freeletics' customer base has grown rapidly, to more than 42 million at present. With the resulting data volumes, this solution had reached its limits.

Building something new together – Designing something new

Based on the insights into the existing DWH and the predicted growth, the following requirements were determined:

What do you need? – The requirements

Non-functional requirements

- Events are provided via HTTP

- More than 100 events per second must be processed

- Data processing almost in real time

- A central database will store Freeletics user data including nested attributes

- Events should be enriched with user attributes from the database

- The application should be scalable, reliable, expandable and easy to maintain

- AWS as a cloud provider

- The operation of the infrastructure should be performed mostly independently by the Data Engineering Team

- Data is to be stored in an S3 data lake

- Python as primary programming language

- PySpark for processing large amounts of data

Functional requirements

- The Data Analytics team should be able to perform more complex analyses with Spark by accessing raw data

- Teams from product development to finance should have the possibility to independently create generic but complex analyses and simple visualizations

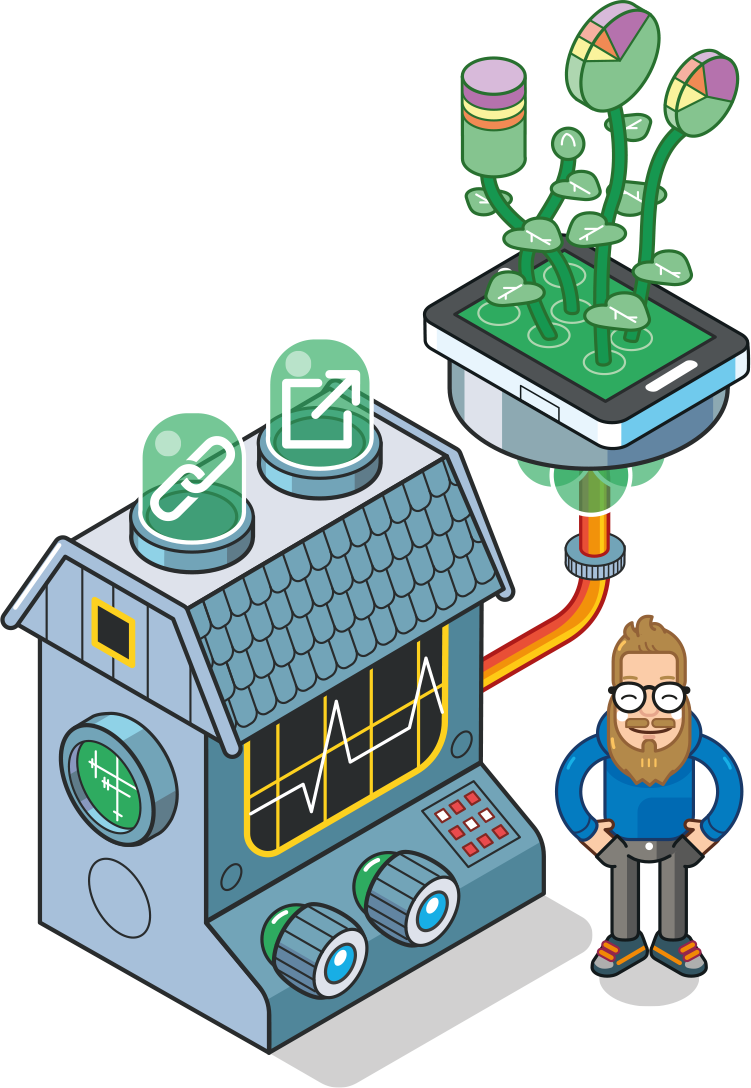

How do we get there? – The new architecture

Data storage of user data and their attributes

Due to the high volume of read and write accesses and the large number of attributes, including nested ones, we chose DynamoDB as the data store for Freeletics users.

The fast response time and scalability of DynamoDB were also decisive factors for this choice.

Event processing

Data is received via an API gateway and processed by Lambda functions.

Processing means enriching events with user attributes (stored in DynamoDB) as well as GDPR-compliant masking of sensitive data such as IP addresses, email addresses, etc.
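To make this step more concrete, here is a minimal Python sketch of enrichment and masking. It is not the actual Freeletics code: the event fields (`user_id`, `ip`, `email`), the function names and the masking rules are assumptions for illustration, and `load_user` is a hypothetical stand-in for the DynamoDB lookup. Whether a particular masking rule is sufficient under GDPR is a question this sketch does not answer.

```python
import copy


def mask_ip(ip: str) -> str:
    """Zero the last octet of an IPv4 address; replace anything else entirely."""
    parts = ip.split(".")
    if len(parts) == 4 and all(p.isdigit() for p in parts):
        return ".".join(parts[:3] + ["0"])
    return "masked"


def mask_email(email: str) -> str:
    """Hide the local part of an email address and keep only the domain."""
    _local, sep, domain = email.partition("@")
    return "***@" + domain if sep else "masked"


def process_event(event: dict, load_user) -> dict:
    """Return a copy of the event, enriched with user attributes and masked.

    load_user is a hypothetical callable (user_id -> dict or None) that stands
    in for the DynamoDB lookup.
    """
    processed = copy.deepcopy(event)
    user = load_user(processed.get("user_id")) or {}
    processed["user_attributes"] = copy.deepcopy(user)
    if "ip" in processed:
        processed["ip"] = mask_ip(processed["ip"])
    if "email" in processed:
        processed["email"] = mask_email(processed["email"])
    return processed


# An in-memory dict plays the role of the user table, nested attributes included.
USERS = {"u1": {"plan": "coach", "settings": {"units": "metric"}}}

example = process_event(
    {
        "user_id": "u1",
        "type": "workout_completed",
        "ip": "203.0.113.42",
        "email": "jane@example.com",
    },
    USERS.get,
)
```

Passing the lookup in as a function keeps the processing logic free of AWS calls, so it can be tested without any infrastructure.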

A general problem with event-based processing is error handling. Something usually goes wrong, no matter how robustly the systems are designed. 🙂

If events are faulty, our software has errors, or a target system is temporarily unavailable, an event cannot be processed successfully at that moment. AWS offers SQS dead-letter queues for this kind of problem.

Image source (emergency escape ramp): https://de.wikipedia.org/wiki/Notfallspur_(Gef%C3%A4lle)#/media/Datei:HGG-Zirlerbergstra%C3%9Fe-Notweg.JPG

If an error occurs during processing, the failed events are moved to an SQS queue. Monitoring the queues, combined with a reprocessing function, makes it possible to process failed events again without losing them.
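The dead-letter pattern can be illustrated without any AWS services. In the following sketch, a plain in-memory `deque` stands in for the SQS dead-letter queue. The functions `handle`, `reprocess` and `store` are hypothetical names, not part of the real system, which used SQS and Lambda.

```python
from collections import deque


def handle(event, process, dead_letters) -> bool:
    """Try to process an event; on any error, park it instead of losing it."""
    try:
        process(event)
        return True
    except Exception as exc:
        dead_letters.append({"event": event, "error": repr(exc)})
        return False


def reprocess(dead_letters, process) -> int:
    """Retry each parked event once; events that fail again are parked again."""
    succeeded = 0
    for _ in range(len(dead_letters)):
        entry = dead_letters.popleft()
        if handle(entry["event"], process, dead_letters):
            succeeded += 1
    return succeeded


# A simulated target system that is temporarily unavailable.
target = {"available": False, "stored": []}


def store(event):
    if not target["available"]:
        raise ConnectionError("target system unavailable")
    target["stored"].append(event)


dlq = deque()                       # stand-in for the SQS dead-letter queue
handle({"id": 1}, store, dlq)       # fails, the event is parked
target["available"] = True
handle({"id": 2}, store, dlq)       # succeeds
recovered = reprocess(dlq, store)   # the parked event is processed after all
```

The retry loop runs once per parked entry, so an event that keeps failing returns to the queue instead of being retried forever within one run.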

Data management of the events

Events are stored in S3, partitioned by event type/YYYY/MM/DD. The Lambda functions mentioned above write them there.
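As a small illustration of this partitioning scheme, the following sketch builds an object key from an event type and a timestamp. The function name, the `.json` suffix, the use of an event ID as file name and the choice of UTC for the date are assumptions; the article only specifies the event type/YYYY/MM/DD layout.

```python
from datetime import datetime, timezone


def event_key(event_type: str, event_id: str, occurred_at: datetime) -> str:
    """Build an object key of the form <event type>/YYYY/MM/DD/<event id>.json.

    The date is taken in UTC (an assumption), so occurred_at should be
    timezone-aware; a naive datetime would be interpreted as local time.
    """
    day = occurred_at.astimezone(timezone.utc)
    return f"{event_type}/{day:%Y/%m/%d}/{event_id}.json"


key = event_key(
    "workout_completed",
    "e-123",
    datetime(2020, 7, 3, 23, 30, tzinfo=timezone.utc),
)
# key == "workout_completed/2020/07/03/e-123.json"
```

With keys laid out like this, a job that only needs one event type and one day can list a single prefix instead of scanning the whole bucket.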

A downstream Databricks job combines the individual events into Parquet files, which allows faster and more cost-effective access to the data.

More Data

Ad spend data was loaded daily from an API by classic Python batch jobs; larger data sets, such as CRM data, were processed by Spark jobs. The resulting data was also written to the S3 data lake.

Data Access

For the Data Analytics Team

The individual events in the data lake, as well as the events aggregated by Databricks, can be accessed with Spark, which also allows large amounts of data to be analyzed efficiently.

For teams from product development to finance

Freeletics chose the tool “Amplitude” to make data accessible to product managers, for example. To this end, events were forwarded to an “export” Lambda, which transferred them to Amplitude. Evaluations can thus be carried out almost in real time. Identifiers shared between the different event types allow complex evaluations for product development and controlling.
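The article does not say how the “export” Lambda talked to Amplitude. As a rough sketch, assuming it used Amplitude's HTTP V2 API, the request body could be built like this. The internal event fields (`id`, `user_id`, `type`, `timestamp_ms`, `properties`) and the function names are hypothetical; only the Amplitude field names and the endpoint follow the public API. The actual HTTP call is left out.

```python
import json

# Endpoint of Amplitude's HTTP V2 API; sending the request is not shown here.
AMPLITUDE_URL = "https://api2.amplitude.com/2/httpapi"


def to_amplitude_event(event: dict) -> dict:
    """Map a (hypothetical) internal event onto Amplitude's event fields."""
    return {
        "user_id": event["user_id"],
        "event_type": event["type"],
        "time": event["timestamp_ms"],   # milliseconds since the epoch
        "insert_id": event["id"],        # lets Amplitude deduplicate retries
        "event_properties": event.get("properties", {}),
    }


def build_request_body(events: list, api_key: str) -> str:
    """Serialize a batch of events into the JSON body the API expects."""
    return json.dumps(
        {"api_key": api_key, "events": [to_amplitude_event(e) for e in events]}
    )


example_body = build_request_body(
    [
        {
            "id": "e-1",
            "user_id": "user-0001",
            "type": "workout_completed",
            "timestamp_ms": 1593819000000,
            "properties": {"duration_s": 900},
        }
    ],
    api_key="YOUR_API_KEY",  # placeholder, not a real key
)
```

Keeping the mapping separate from the HTTP call makes it easy to check what would be sent before any data leaves the system.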

Hand in hand for data – Implementing together

We implemented the previously planned system together with Freeletics’ data engineering team.

After about 8 months of development, all required functionality had been implemented in the new system and was available to the users. The old DWH could be shut down.

Afterwards, the Freeletics Data Engineering Team took over operation, maintenance and further development of the system completely. FELD M continues to support the team to this day (July 2020).

A bright future – A conciliatory outlook

As developers, we should not be put off or even intimidated by what one of my best friends likes to describe as “technology bombshells”. Extensive experience with technology and a deep understanding of it allow us to develop good applications, be it in Azure, AWS, on-premise or any other environment.

After all, our applications are still based on the good old protocols such as TCP, UDP or HTTP.